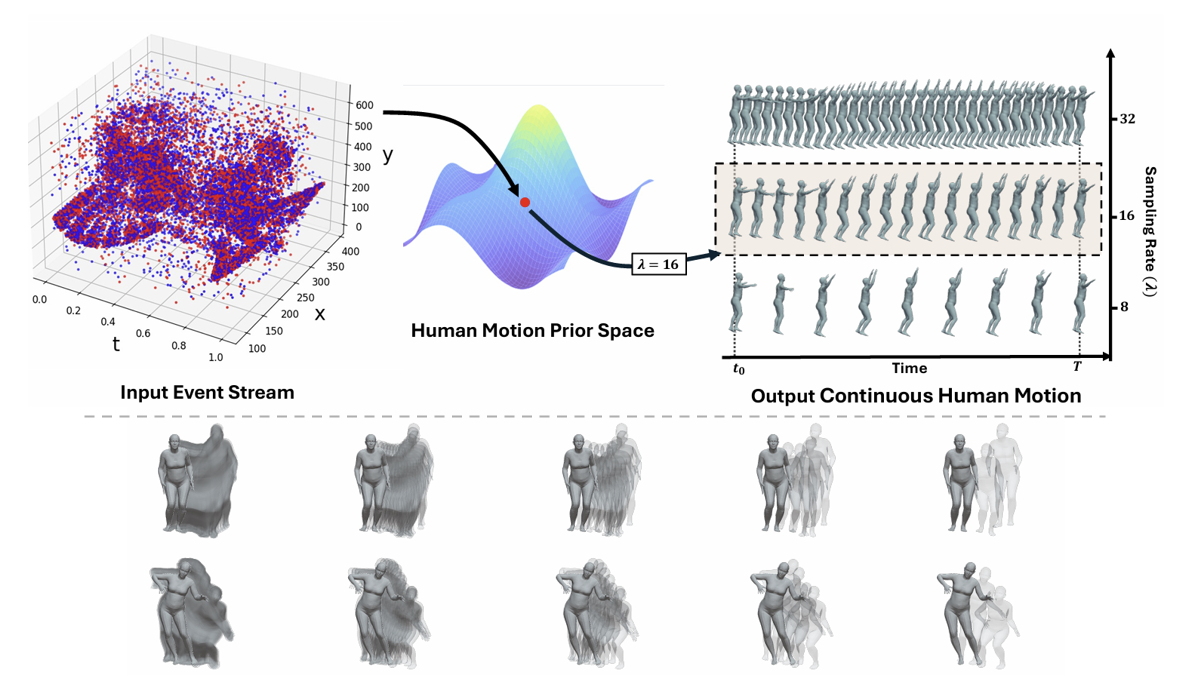

This paper tackles the challenge of estimating a continuous-time human motion field from an event stream. Current Human Mesh Recovery (HMR) methods predominantly use frame-based approaches, which are susceptible to aliasing and inaccuracies caused by limited temporal resolution and motion blur. In contrast, we propose a method to predict a continuous-time human motion field directly from events. Our approach employs a recurrent feed-forward neural network to model human motion in the latent space of possible movements. Previous state-of-the-art event-based methods rely on computationally expensive optimizations over a fixed number of poses at high frame rates, which become impractical as temporal resolution increases. Instead, we introduce the first method to replace traditional discrete-time predictions with a continuous human motion field, represented as a time-implicit function that supports parallel pose queries at arbitrary temporal resolutions.

Continuous-Time Human Motion Field from Events

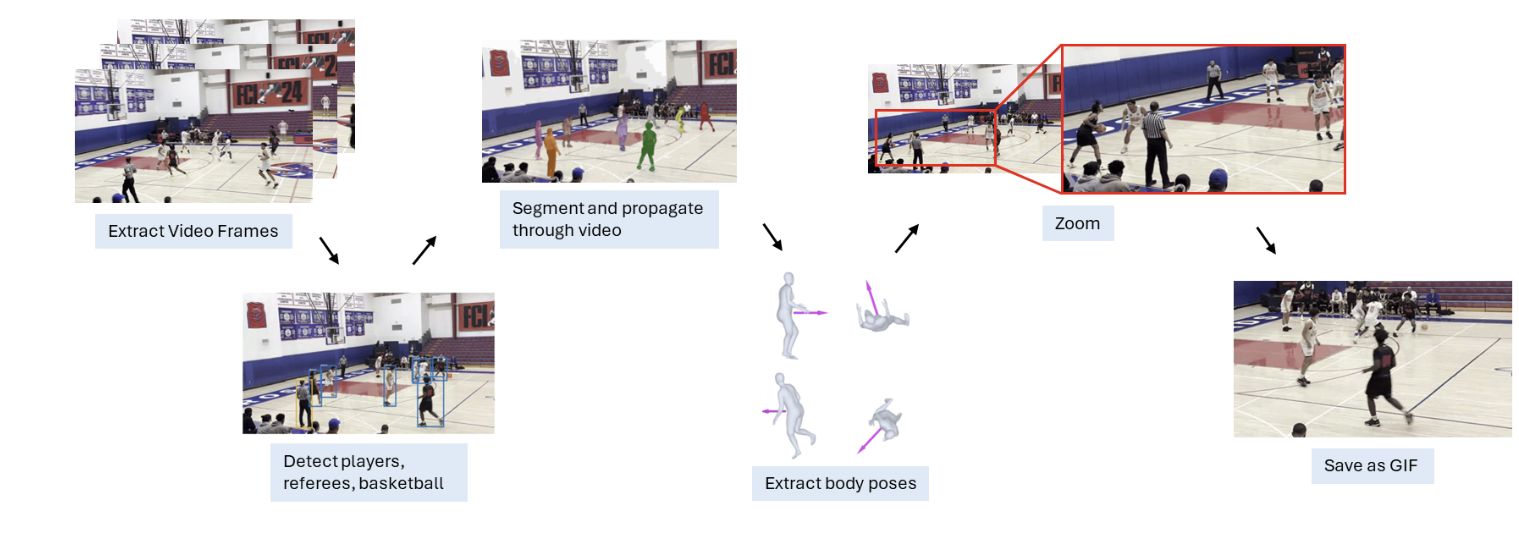

Auto-Zooming Camera in Basketball Game

Basketball games often use only half of the court at a time, requiring the camera to pan and zoom to follow the players' movements. This project aims to develop a system for automated zooming in basketball games. The system benefits producers by automatically tracking players to generate high-quality video streams and assists coaches by focusing on key players for game analysis.

Solution:

1. Object Detection: The system detects key elements (players, referees, and the basketball) using models such as YOLOv11 trained on basketball data or the Grounding DINO model.
2. Tracking: Accurate tracking ensures continuity even when object detection fails or misidentifies objects in certain frames.
3. Pose Estimation: Player pose data is used to enhance tracking accuracy, refine zoom effects, and predict transitions when tracking falters.
4. Zooming: The system constructs a bounding box based on segmented objects and adjusts the zoom to center it while maintaining frame constraints. Smooth transitions between frames are achieved through interpolation, guided by pose estimation data and changes in zoom values.

This integrated approach ensures seamless, high-quality video output tailored for both streaming and analysis purposes.
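The zooming step can be illustrated with a minimal sketch. It assumes detections arrive as axis-aligned pixel boxes; the `Box` type, the `margin` padding, and the blending factor `alpha` are hypothetical names chosen for illustration, and the simple linear blend stands in for whatever interpolation the project actually used.

```python
from dataclasses import dataclass


@dataclass
class Box:
    x1: float
    y1: float
    x2: float
    y2: float


def union_box(boxes):
    """Smallest box that encloses every tracked object."""
    return Box(min(b.x1 for b in boxes), min(b.y1 for b in boxes),
               max(b.x2 for b in boxes), max(b.y2 for b in boxes))


def zoom_window(boxes, frame_w, frame_h, margin=0.1):
    """Crop window with the frame's aspect ratio, centered on the objects
    and clamped so it never leaves the frame (margin is an assumed padding)."""
    u = union_box(boxes)
    cx, cy = (u.x1 + u.x2) / 2, (u.y1 + u.y2) / 2
    w = max((u.x2 - u.x1) * (1 + 2 * margin), 1.0)
    h = max((u.y2 - u.y1) * (1 + 2 * margin), 1.0)
    aspect = frame_w / frame_h
    # Grow the shorter side so the window matches the frame aspect ratio.
    if w / h < aspect:
        w = h * aspect
    else:
        h = w / aspect
    # Shrink uniformly if the window would be larger than the frame.
    scale = min(1.0, frame_w / w)
    w, h = w * scale, h * scale
    # Shift the center so the window stays inside the frame.
    cx = min(max(cx, w / 2), frame_w - w / 2)
    cy = min(max(cy, h / 2), frame_h - h / 2)
    return Box(cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def smooth(prev, target, alpha=0.2):
    """Blend the previous window toward the new target to avoid jumps."""
    def lerp(a, b):
        return a + alpha * (b - a)
    return Box(lerp(prev.x1, target.x1), lerp(prev.y1, target.y1),
               lerp(prev.x2, target.x2), lerp(prev.y2, target.y2))
```

Calling `zoom_window` once per frame and passing its result through `smooth` with the previous frame's window gives a crop that follows the play without abrupt changes; a smaller `alpha` trades responsiveness for steadier motion.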

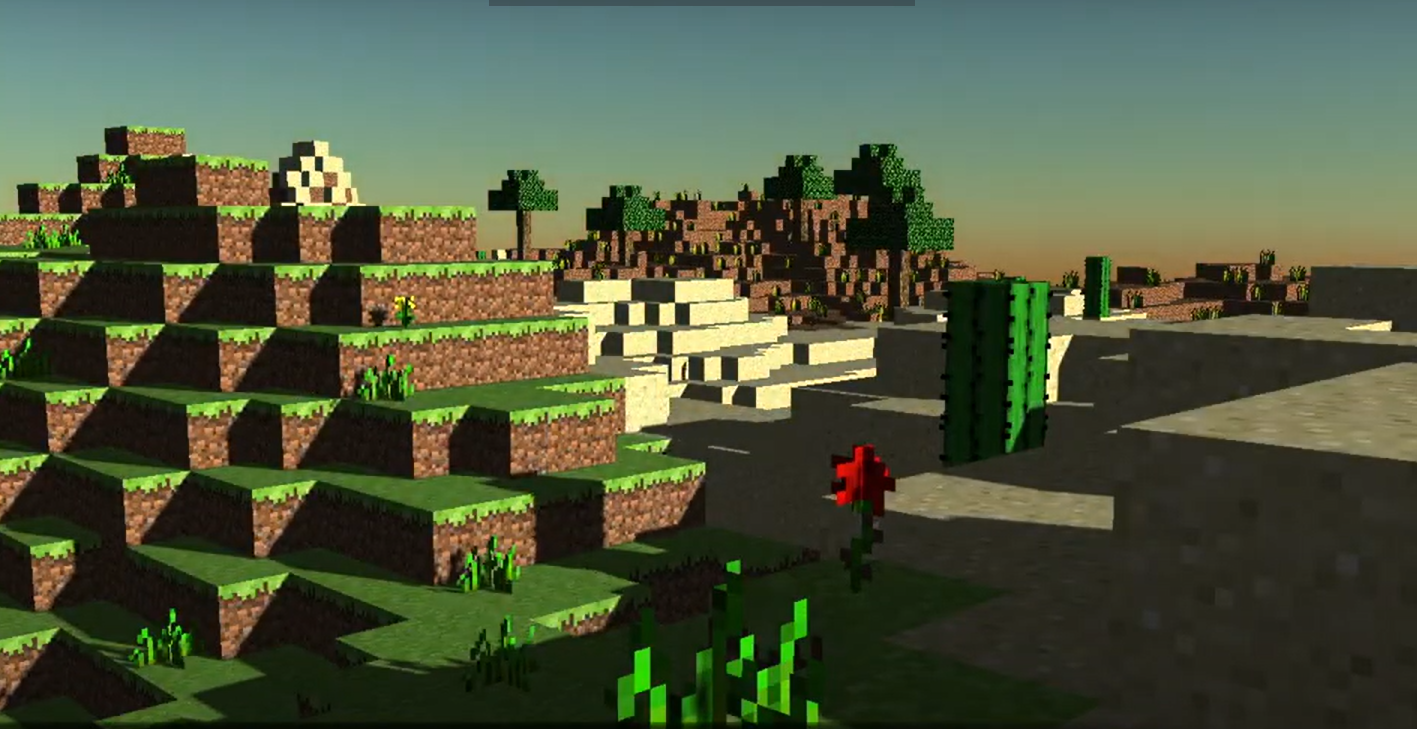

Self-Made Minecraft Sandbox Physics Engine

This project is a procedurally generated, multi-biome 3D world featuring a range of environments and enhanced visual effects. The core landscape includes grassland, desert, mountainous, sandy, and water biomes that blend seamlessly using procedural noise functions like fbm and Perlin noise. Our team utilized specialized parameters for each biome to create unique elevations, smoother or sharper terrain features, and biome-specific aesthetics. Underground, 3D Perlin noise generates cave systems with moisture-based water levels, adding realism. For optimized rendering, a terrain chunking system dynamically loads and unloads blocks as players navigate. Multithreading was implemented to ensure efficient terrain generation and memory management, with separate threads managing block and VBO data. Player physics, including collision detection and adjustments for water and lava traversal, were refined with raycasting to support smoother interactions. These environments respond to the player's movement, adding features like camera tinting when submerged in water or lava, along with slowed movement and reduced jump forces for an immersive experience. To manage player resources, a GUI-based inventory and toolbar were designed, enabling players to select and track blocks. The toolbar provides quick access, while the inventory system allows players to add and remove block types. Special effects such as Blinn-Phong shading and animated water waves heighten visual appeal, and a day-night cycle creates dynamic lighting across different times of the day, enhancing the world’s ambiance and realism. Together, these elements form a dynamic game environment that blends procedural generation with optimized rendering and user-friendly controls.
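As a rough illustration of how fBm layered over Perlin-style noise can drive terrain height, here is a minimal sketch. The hash constants, the parameter defaults, and the `terrain_height` helper are assumptions made for this example, not the project's actual values; per-biome tuning would correspond to passing different `amplitude`, `scale`, and `octaves` arguments.

```python
import math


def _gradient(ix, iy, seed=0):
    """Hash integer lattice coordinates to a pseudo-random unit gradient."""
    h = (ix * 374761393 + iy * 668265263 + seed * 1442695041) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    angle = (h / 0xFFFFFFFF) * 2 * math.pi
    return math.cos(angle), math.sin(angle)


def _fade(t):
    """Quintic smoothstep used by improved Perlin noise."""
    return t * t * t * (t * (t * 6 - 15) + 10)


def perlin2d(x, y, seed=0):
    """2D gradient noise; the value is zero at integer lattice points."""
    x0, y0 = math.floor(x), math.floor(y)

    def dot(ix, iy):
        gx, gy = _gradient(ix, iy, seed)
        return gx * (x - ix) + gy * (y - iy)

    u, v = _fade(x - x0), _fade(y - y0)
    top = dot(x0, y0) + u * (dot(x0 + 1, y0) - dot(x0, y0))
    bottom = dot(x0, y0 + 1) + u * (dot(x0 + 1, y0 + 1) - dot(x0, y0 + 1))
    return top + v * (bottom - top)


def fbm(x, y, octaves=4, lacunarity=2.0, gain=0.5, seed=0):
    """Sum octaves of noise with rising frequency and falling amplitude."""
    total, amplitude, frequency, norm = 0.0, 1.0, 1.0, 0.0
    for i in range(octaves):
        total += amplitude * perlin2d(x * frequency, y * frequency, seed + i)
        norm += amplitude
        amplitude *= gain
        frequency *= lacunarity
    return total / norm  # normalized by the total amplitude


def terrain_height(x, z, base=64, amplitude=24, scale=0.01, octaves=4):
    """Hypothetical per-biome height function: block column height at (x, z)."""
    return int(base + amplitude * fbm(x * scale, z * scale, octaves))
```

In this sketch a flat biome would use a small `amplitude` and few octaves, while a mountainous one would raise both; blending biomes then amounts to interpolating between two such height functions.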

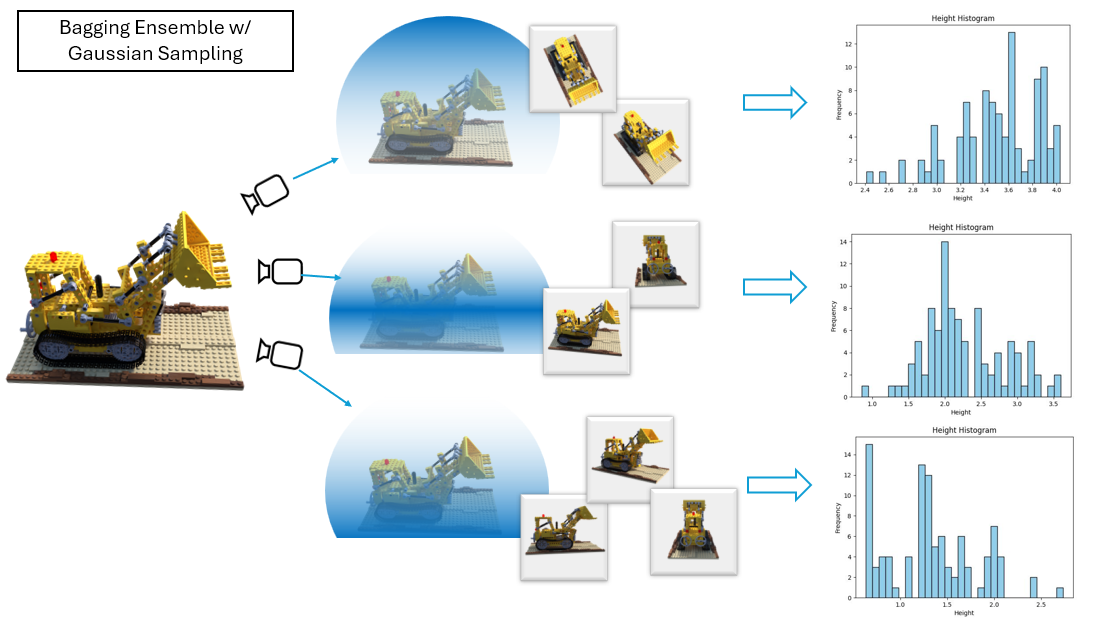

NeRF Rendering with Ensemble Learning

This project, which I led as my applied machine learning final project, enhances Neural Radiance Fields (NeRF) for generating unseen views of objects from images captured at multiple angles. Inspired by ensemble learning, we introduce two strategies: Random Initialization Ensembles (RIE) and Bagging Ensembles. RIE trains multiple TinyNeRF models with different hyperparameters, then aggregates their outputs using Averaging, Soft Voting, and Hard Voting. The Bagging approach samples data subsets randomly, training a diverse TinyNeRF ensemble from scratch. By sampling from a Gaussian distribution rather than a uniform one, Bagging draws denser data near the mean, enabling models to perform better in key spatial regions. Position-conditioned aggregation builds on this by letting each model specialize its predictions in specific spatial zones. Results demonstrate that these ensemble techniques and sampling strategies substantially improve NeRF’s accuracy and robustness in 3D reconstruction.
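The Gaussian bagging and averaging ideas can be sketched as follows. This is only one possible reading: it assumes training samples are held in an ordered list and that "near the mean" refers to the middle of that index range. The function names and the `sigma_frac` parameter are hypothetical, and the model outputs are stand-in RGB triples rather than real TinyNeRF predictions.

```python
import random


def gaussian_bagging_indices(n_items, n_samples, rng, sigma_frac=0.25):
    """Draw indices with replacement, concentrated around the middle of
    the dataset (assumed meaning of 'denser data near the mean')."""
    mean = (n_items - 1) / 2
    sigma = sigma_frac * n_items
    indices = []
    while len(indices) < n_samples:
        i = round(rng.gauss(mean, sigma))
        if 0 <= i < n_items:  # reject draws that fall outside the dataset
            indices.append(i)
    return indices


def average_predictions(predictions):
    """Element-wise mean of per-model predictions, e.g. RGB for one ray."""
    n = len(predictions)
    return [sum(channel) / n for channel in zip(*predictions)]


# Each ensemble member would be trained on its own bagged subset.
rng = random.Random(0)
subsets = [gaussian_bagging_indices(100, 50, rng) for _ in range(3)]

# Stand-in outputs from three models for the same ray.
blended = average_predictions([[0.2, 0.4, 0.6],
                               [0.4, 0.6, 0.8],
                               [0.3, 0.5, 0.7]])
```

Averaging is the simplest of the aggregation rules named above; a position-conditioned variant would replace the uniform `1 / n` weights with weights that depend on where the query point lies.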

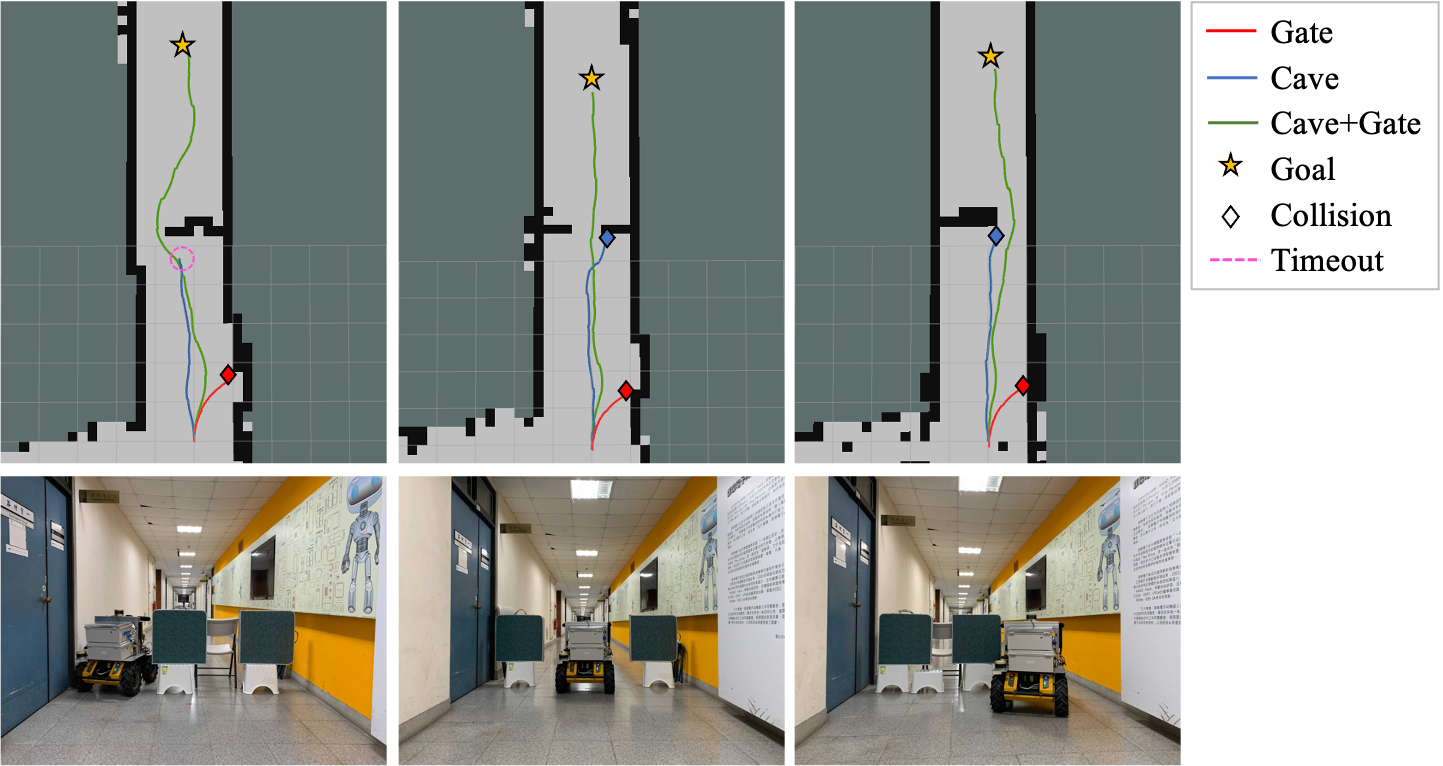

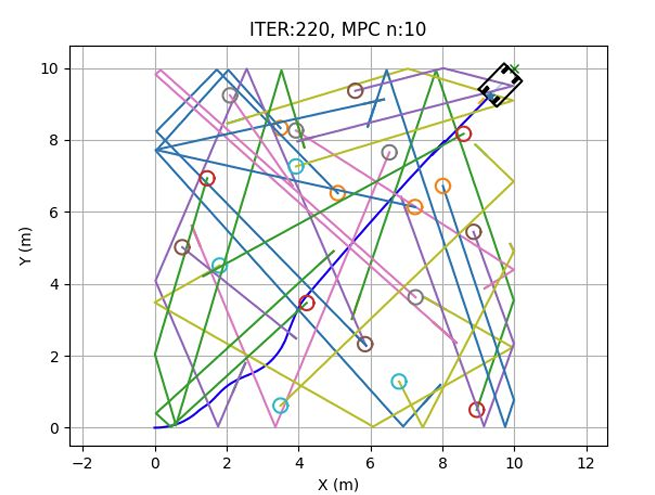

Obstacle Avoidance in Dense Environments using MPC

This project explores Model Predictive Contouring Control (MPCC) to enhance local planning for mobile robots navigating dynamic and unstructured environments. Recognizing the challenges robots face in non-convex, crowded spaces—such as the risk of collisions or the "freezing robot" problem—the project implemented MPCC with specialized constraints for static and dynamic obstacle avoidance. Static obstacles were approximated with convex rectangular regions, while dynamic obstacles used Euclidean distance metrics in a filtered "obstacle window," focusing only on imminent threats. Testing revealed that MPCC outperformed the Dynamic Window Approach (DWA) in safety, particularly in a challenging environment with 10 dynamic obstacles. Notably, filtering obstacles by obstacle window led to safer navigation, showcasing MPCC’s robustness and adaptability in high-risk settings.
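The obstacle-window idea can be sketched in a few lines. This assumes the window is a fixed radius around the robot and that each retained obstacle contributes a simple distance constraint; `radius`, `safe_dist`, and both function names are hypothetical, and the real MPCC formulation would evaluate such constraints at every step of the prediction horizon.

```python
import math


def obstacle_window(robot_xy, obstacles, radius):
    """Keep only the dynamic obstacles within `radius` of the robot,
    ordered from nearest to farthest."""
    rx, ry = robot_xy
    ranked = sorted((math.hypot(ox - rx, oy - ry), (ox, oy))
                    for ox, oy in obstacles)
    return [pos for dist, pos in ranked if dist <= radius]


def clearance_constraints(robot_xy, window, safe_dist):
    """Return g_i = distance_i - safe_dist for each obstacle in the window;
    the constraint for obstacle i is satisfied when g_i >= 0."""
    rx, ry = robot_xy
    return [math.hypot(ox - rx, oy - ry) - safe_dist for ox, oy in window]
```

Filtering first keeps the number of constraints handed to the optimizer small, since distant obstacles that cannot affect the next few steps are dropped before the problem is built.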

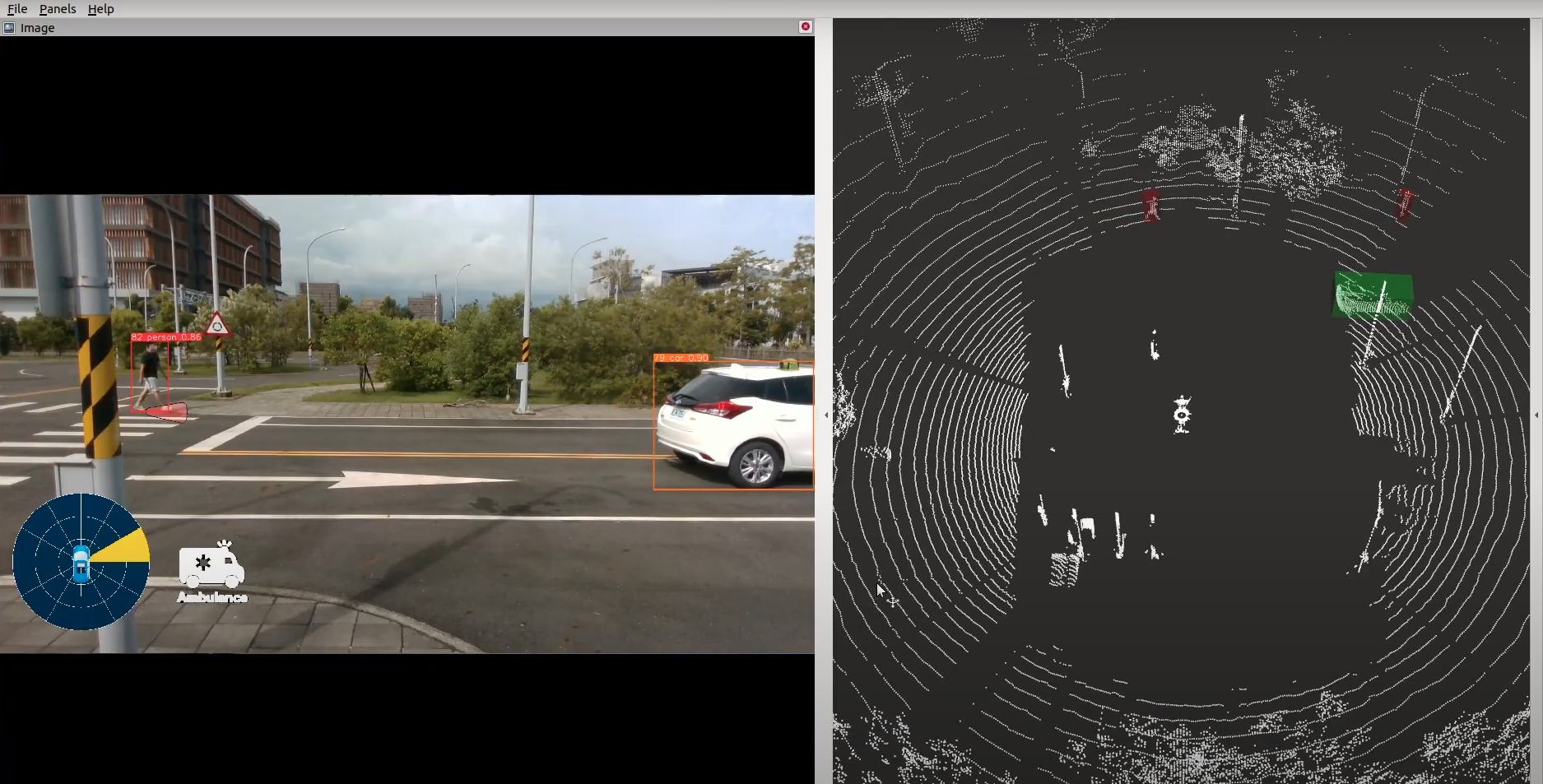

Vision Assistive System for Pedestrian Prediction and Special Vehicle Detection

We proposed an integrated assistive system that fuses vision, LiDAR, and sound to predict pedestrians’ movement and recognize the direction of specific vehicles such as ambulances. The project was funded by MOST to develop a self-driving car assistance system. Our goal was to build a subsystem that helps self-driving cars predict pedestrians’ intent from vision and LiDAR to avoid safety hazards, and that recognizes the sounds of particular vehicles such as ambulances. We constructed real-time data pipelines on the Robot Operating System (ROS), so the process runs at high speed and can be deployed in real-time applications.

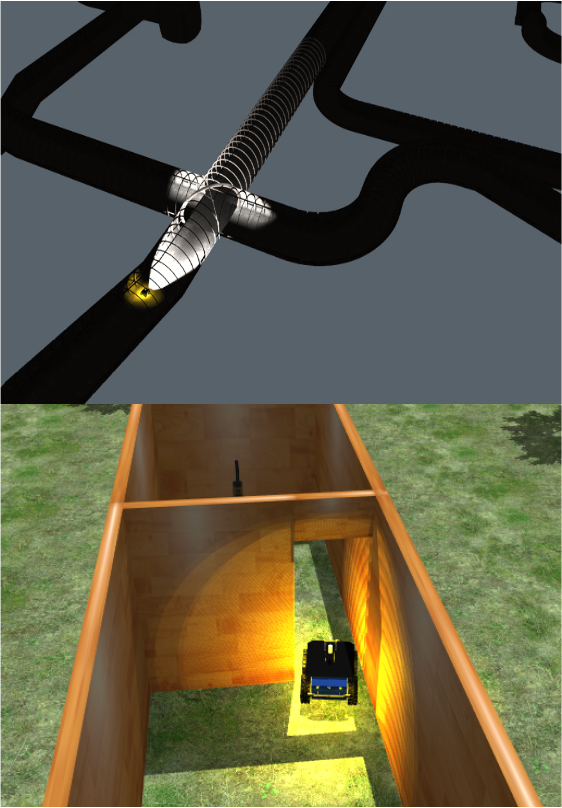

DARPA SubT Urban Challenge

The DARPA Subterranean (SubT) Challenge aims to develop innovative technologies that augment underground operations. The SubT Challenge explores new approaches to rapidly map, navigate, search, and exploit complex underground environments. I participated in the Urban challenge with my team and our robots in a decommissioned nuclear power plant.

Publication: C.-L. Lu*, J.-T. Huang*, C.-I. Huang, Z.-Y. Liu, C.-C. Hsu, Y.-Y. Huang, S.-C. Huang, P.-K. Chang, Z. L. Ewe, P.-J. Huang, P.-L. Li, B.-H. Wang, L.-S. Yim, S.-W. Huang, M.-S. Bai, H.-C. Wang. "A Heterogeneous Unmanned Ground Vehicle and Blimp Robot Team for Search and Rescue using Data-driven Autonomy and Communication-aware Navigation," in Field Robotics, Special Issue: Advancements and lessons learned during Phase I & II of the DARPA Subterranean Challenge, 2021.

Deep Reinforcement Learning in Simulation